Introduction

In 2012, Knight Capital Group deployed a software update to its trading platform. Within minutes, the system began placing trades that no one had intended. It took the company about 45 minutes to shut the system down, and by then the bug had cost $440 million and nearly put Knight out of business. This failure was not caused by a single “missed test.” The breakdown came from the software's release and validation processes as a whole. The incident now serves as a case study of what happens when testing and release procedures do not account for real production risks.

The reality is that most bugs won't cost you anywhere near that much, but they will cost you something: lost revenue, customer trust, or development time.

There are dozens of testing types out there, and everyone has a different opinion about them. Some people swear by test-driven development, while others find it impractical. Some teams automate aggressively, while others still rely on manual testing where it makes sense.

Instead of adding to that debate, this guide focuses on what actually matters: which testing strategies and types are useful in practice, what problems they’re good at catching, and when they’re probably not worth the effort.

What Is Software Testing

Software testing is the process of checking whether a system behaves as expected under real conditions. It's not just about finding bugs or proving that something works once. Testing looks at how software handles everyday use, edge cases, mistakes, and changes over time. In practical terms, testing compares requirements with reality: it lets teams verify that they’ve built the right solution and that it works as intended. Good testing looks at both the technical side and how real users interact with the system in practice.

Types of Software Testing

Software breaks in different ways and for different reasons. A feature can work perfectly on its own and still fail once it’s connected to other parts of the system. A change that looks harmless can quietly break something that already worked. Different types of software testing exist to catch these problems at the right time, before they turn into production issues or user-facing failures.

Black Box Testing

Black box testing focuses on what the system does, not how it’s built. Testers interact with the application by providing inputs and checking outputs against expected results, without any knowledge of the internal code. This approach mirrors real user behavior and is especially useful for validating requirements, workflows, and edge cases that developers may not anticipate.
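As a rough illustration, a black box test can be written as a table of inputs and expected outputs taken from the requirements. The sketch below assumes a hypothetical `is_valid_username` function and a made-up rule (3 to 16 letters, digits, or underscores); the test runner only calls the function and never looks at how it is implemented.

```python
import re


def is_valid_username(name: str) -> bool:
    """Hypothetical system under test: 3-16 letters, digits, or underscores."""
    return re.fullmatch(r"[A-Za-z0-9_]{3,16}", name) is not None


# Cases come from the (assumed) requirement, not from reading the code.
CASES = [
    ("alice", True),
    ("ab", False),         # one character too short
    ("a" * 16, True),      # exactly at the upper limit
    ("a" * 17, False),     # one character past the limit
    ("bob smith", False),  # spaces are not allowed
    ("", False),           # empty input
]


def run_black_box_cases(func, cases):
    """Call func with each input and collect (input, expected, actual) mismatches."""
    failures = []
    for given, expected in cases:
        actual = func(given)
        if actual != expected:
            failures.append((given, expected, actual))
    return failures
```

Because the cases depend only on inputs and outputs, the same table could be reused unchanged if the implementation were rewritten.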

White Box Testing

White box testing looks inside the application to verify how the code works. It checks logic paths, conditions, loops, and error handling to ensure all critical branches are exercised. These tests help uncover hidden issues like unreachable code, incorrect assumptions, or unhandled scenarios that may never surface through user-facing tests alone.
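To make the idea of exercising every branch concrete, here is a minimal sketch. The `shipping_fee` function and its fee values are invented for illustration; the point is that each test input is chosen by reading the code so that every path runs at least once.

```python
def shipping_fee(order_total: float, is_member: bool) -> float:
    """Hypothetical function with four distinct paths through the code."""
    if order_total < 0:       # branch A: invalid input
        raise ValueError("order_total cannot be negative")
    if order_total >= 50:     # branch B: free shipping threshold
        return 0.0
    if is_member:             # branch C: reduced fee for members
        return 2.5
    return 5.0                # branch D: default fee


def test_every_branch_is_exercised():
    # Branch A: a negative total must be rejected.
    try:
        shipping_fee(-1, False)
    except ValueError:
        pass
    else:
        raise AssertionError("negative totals should be rejected")

    # Branch B, tested exactly at the boundary the condition uses.
    assert shipping_fee(50, False) == 0.0
    # Branch C: below the threshold, member.
    assert shipping_fee(49.99, True) == 2.5
    # Branch D: below the threshold, non-member.
    assert shipping_fee(49.99, False) == 5.0
```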

Unit Testing

Unit testing breaks an application down into its smallest testable pieces, such as a function or a method. Each unit is run in isolation to confirm that it produces the expected output. Unit tests run quickly, so teams can run them on every change to keep the application stable.
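A unit test can be as small as the sketch below. The `apply_discount` function is a hypothetical example, and the tests are plain functions with `assert` statements in the style that runners such as pytest collect; nothing outside the function is involved.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_typical_discount():
    assert apply_discount(200.0, 25) == 150.0


def test_zero_discount_returns_original_price():
    assert apply_discount(99.99, 0) == 99.99


def test_invalid_percent_is_rejected():
    try:
        apply_discount(100.0, 120)
    except ValueError:
        return
    raise AssertionError("a discount above 100 percent should be rejected")
```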

Integration Testing

Integration testing checks how different modules, services, or APIs interact once they are connected. Even when individual components work correctly on their own, problems often arise at integration points, such as data mismatches or communication failures. These tests help identify issues that only appear when systems depend on each other.
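One way to picture a data mismatch at an integration point is a producer and a consumer that must agree on a payload format. In this sketch, `serialize_order` and `parse_order` stand in for two hypothetical services; the test passes data across the boundary and checks that nothing is lost or reinterpreted along the way.

```python
import json
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    amount_cents: int


def serialize_order(order: Order) -> str:
    """Producer side: what a hypothetical order service would send."""
    return json.dumps(
        {"order_id": order.order_id, "amount_cents": order.amount_cents}
    )


def parse_order(payload: str) -> Order:
    """Consumer side: what a hypothetical billing service would read."""
    data = json.loads(payload)
    return Order(order_id=data["order_id"], amount_cents=int(data["amount_cents"]))


def test_order_survives_the_service_boundary():
    original = Order(order_id="A-100", amount_cents=1999)
    # If one side renamed a field or switched from cents to dollars,
    # this round trip would fail even though each side works alone.
    assert parse_order(serialize_order(original)) == original
```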

Functional Testing

Functional testing verifies that each feature of the software behaves according to defined requirements. It focuses on business logic and expected outcomes rather than technical implementation. This type of testing helps make sure that what was built aligns with what was requested, making it especially important for feature validation and regression coverage.

System Testing

System testing validates the whole application in an environment that closely resembles production. It verifies that all components work together as expected and that the system meets both functional and non-functional requirements. This testing helps catch issues that can only appear when the full system is in place.

Acceptance Testing

Acceptance testing determines whether the software is ready to be delivered to users. It evaluates the system from both a business and a user perspective, and it often involves stakeholders and product owners. The focus is on confidence: verifying that the software meets expectations and supports real-world use.

Regression Testing

Regression testing verifies that recent changes have not broken existing functionality. As software evolves, even small updates can have unintended side effects. Regression testing acts as a safety net, helping teams move faster without constantly rechecking the same areas manually.
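A common way to build that safety net is to add a test each time a defect is fixed, so the same defect cannot quietly return. The sketch below uses a hypothetical `slugify` helper and an invented past bug purely for illustration.

```python
def slugify(title: str) -> str:
    """Hypothetical helper: turn a title into a URL-friendly slug."""
    # split() with no arguments collapses any run of whitespace.
    words = title.lower().split()
    return "-".join(words)


def test_slugify_collapses_repeated_spaces():
    # Invented past defect: "Hello  World" once produced "hello--world".
    # This test stays in the suite so that behavior cannot come back unnoticed.
    assert slugify("Hello  World") == "hello-world"


def test_slugify_existing_behavior_unchanged():
    # Guards behavior that already worked before the fix.
    assert slugify("Release Notes") == "release-notes"
```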

Performance Testing

Performance testing assesses how the system responds to varying loads. As usage rises, it considers response time, resource consumption, and overall stability. These tests prevent failures during demand spikes and help teams understand system limitations.
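A very small version of this idea is to call an operation repeatedly, record how long each call takes, and compare a high percentile against a budget. The sketch below uses only the standard library; the function names and the budget are assumptions, and real load tests usually rely on dedicated tools and production-like environments.

```python
import math
import time


def measure_latencies(func, calls):
    """Call func repeatedly and return each call's duration in seconds."""
    latencies = []
    for _ in range(calls):
        start = time.perf_counter()
        func()
        latencies.append(time.perf_counter() - start)
    return latencies


def percentile(values, pct):
    """Nearest-rank percentile; pct is an integer from 1 to 100."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def within_budget(func, calls, budget_seconds):
    """True if the 95th percentile latency stays under the given budget."""
    return percentile(measure_latencies(func, calls), 95) < budget_seconds
```

Checking a percentile rather than the average keeps a handful of slow calls from hiding behind many fast ones.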

Security Testing

Security testing focuses on protecting the system and its data from threats. It finds defects like exposed data, exploitable inputs, and poor access controls. This type of testing is critical for reducing risk and ensuring the application can withstand real-world attacks.
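As one small example of testing for exploitable inputs, the sketch below feeds a classic SQL injection payload to a lookup function and checks that it matches nothing. The table, the `find_user` function, and the payload are illustrative assumptions; the query uses the `?` placeholder from Python's built-in `sqlite3` module so the input is treated as data rather than as SQL.

```python
import sqlite3


def make_test_db():
    """Build an in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user(conn, username):
    """Look up a user with a parameterized query."""
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchall()


def test_injection_payload_matches_nothing():
    conn = make_test_db()
    # If the query were built by string concatenation, this payload
    # would turn the WHERE clause into a condition that is always true.
    assert find_user(conn, "' OR '1'='1") == []
    # A legitimate lookup still works.
    assert find_user(conn, "alice") == [(1, "alice")]
```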

Software Testing Strategies

While testing types describe what you test, a testing strategy explains how you approach testing overall. It is the thinking behind the work. A testing strategy helps the team decide where to focus, which risks matter most, and which testing types actually make sense for the product and the stage it's in.

A software testing strategy sets priorities, outlining what should be tested first, what can wait, and what requires deeper consideration. The majority of teams don't just use a single strategy. Rather, they combine multiple strategies based on the system, the risks, and how the software is built and released.

Below are some of the most common testing strategies and how they’re typically applied in practice.

Static Testing Strategy

A static testing strategy focuses on identifying problems without executing the software. The goal is prevention rather than detection: catching issues early, when they're cheapest and easiest to fix. This strategy relies heavily on reviews and analysis instead of test execution.

Teams often review requirements, designs, and code together before anything is run. These conversations surface issues early: unclear acceptance criteria, mismatched requirements, or design decisions that could cause problems later. Finding these gaps before a test environment even exists saves time and rework. Code reviews serve the same purpose. They help catch logic errors, security risks, and code that will be hard to support or extend over time.

Static testing cannot replace dynamic testing, but it does reduce the number of defects that reach later stages. Teams that invest time in static testing often see fewer surprises later in the cycle, especially in complex systems where fixing issues later can be costly.
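Part of static testing can be automated. The sketch below uses Python's standard `ast` module to flag bare `except:` clauses, which can hide real errors, without ever running the code being checked. The `find_bare_excepts` helper and the sample source are illustrative assumptions; in practice, teams usually rely on established linters rather than hand-written checks.

```python
import ast


def find_bare_excepts(source: str) -> list:
    """Return the line numbers of bare `except:` clauses in the source text."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


# Sample code to analyze. It is parsed as text and never executed.
SAMPLE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''

print(find_bare_excepts(SAMPLE))
```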

Structural Testing Strategy

A structural testing strategy focuses on the internal workings of the software. It looks at how the system is built rather than how it appears to users. This strategy is tied to the codebase, and it is usually applied early and then continuously throughout development.

Unit testing, code-level integration testing, and white box testing are examples of a structural testing strategy. These test types validate logic paths, data handling, error conditions, and interactions between internal components. The goal is to make sure the system operates reliably under controlled conditions and is technically sound.

Structural testing helps teams build confidence in the foundation of the software. When the internal logic is reliable, higher-level testing becomes more effective. Without this strategy, teams often lean heavily on end-to-end tests to catch issues that should have been identified much earlier.

Behavioral Testing Strategy

The behavioral testing strategy focuses on how the system behaves from the outside. It doesn't concern itself with how features are implemented, only with whether they work as expected. This approach aligns closely with user needs and business requirements.

Black box testing, functional testing, system testing, acceptance testing, and regression testing are commonly used testing types in this strategy. These tests validate workflows, data processing, and feature outcomes based on the expected behavior.

Behavioral testing plays a key role in making sure the software delivers real value. It confirms that features behave as expected, continue to work after changes, and support the core workflows users rely on. This is often where issues with the greatest impact on users come to light.

Front-End Testing Strategy

A front-end testing strategy focuses on the parts of the system that users interact with directly, including layout, navigation, responsiveness, accessibility, and cross-device and cross-browser behavior. Front-end testing also overlaps with performance testing when page load times or client-side responsiveness are important. Although it is often grouped under functional testing, front-end testing deserves its own focus because UI issues can quickly damage user trust.

Front-end testing makes sure the application works the way users expect it to. Even when the back-end is stable, small interface issues can make the product feel unreliable. Paying attention to the front end helps teams catch problems that deeper technical tests usually miss.

What Is the Best Software Testing Strategy

There is no single strategy that is ideal for every situation. What makes sense for one product or team might not be as useful for another. The right approach depends on factors like the complexity of the system, how often the system changes, and what happens if something breaks in production.

A small internal tool carries very different risks than a public-facing application used by hundreds of people. Most teams end up mixing several strategies and adjusting them over time as the product grows. The goal is to focus the testing effort where it actually reduces risk.

Key Elements to Consider When Choosing a Software Testing Strategy

Choosing a testing strategy is not about following a framework or copying what other teams are doing. It's about understanding your product, your risks, and the constraints you are working with. A strategy that works well for one team might not work for another. Before deciding on a strategy, it helps to consider a few practical factors that shape how testing should be done.

Product Complexity and Risk

Start by figuring out how complex the system is and what is at stake if something fails. Software with many integrations, sensitive data, or strict requirements needs more rigorous testing. Simpler tools with limited users can often get by with a lighter approach. The higher the risk, the more careful the testing should be.
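One simple way to turn "what is at stake" into a testing order is to score each area by how likely it is to fail and how bad a failure would be. The sketch below is an illustration only: the areas, the 1 to 5 scales, and the scores are all made up, and multiplying likelihood by impact is just one common convention.

```python
from dataclasses import dataclass


@dataclass
class Area:
    name: str
    likelihood: int  # 1 (rarely fails) to 5 (fails often)
    impact: int      # 1 (minor annoyance) to 5 (severe business damage)

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def prioritize(areas):
    """Order areas from highest to lowest risk score."""
    return sorted(areas, key=lambda area: area.risk, reverse=True)


# Hypothetical product areas with invented scores.
AREAS = [
    Area("login", likelihood=2, impact=5),
    Area("checkout", likelihood=3, impact=5),
    Area("profile_avatar", likelihood=2, impact=1),
    Area("search", likelihood=4, impact=3),
]

for area in prioritize(AREAS):
    print(area.name, area.risk)
```

The numbers matter less than the conversation they force: the team has to agree on which failures would actually hurt.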

Frequency of Change

How often the product changes has a big impact on testing. Teams that ship updates frequently need strategies that support fast feedback, such as strong regression coverage and reliable automation. Products that change less often can afford more manual effort. The main goal is to make sure that testing keeps pace with development rather than slowing it down.

Team Skills and Structure

A testing strategy also has to fit the people executing it. A team with strong automation skills can depend more on code-based tests, while teams with limited resources may rely more on manual and exploratory testing. Cross-functional teams tend to share responsibilities, which affects where and how testing happens.

Time and Resource Constraints

Testing time is limited. Deadlines, staffing, and budget all constrain what is possible. A good strategy acknowledges these limits and prioritizes testing efforts instead of trying to cover everything. It's better to test the most critical areas well than to test everything poorly.

User Impact and Business Goals

Not every feature matters equally to users and the business. Core workflows, revenue-related features, and high-traffic areas deserve more attention than peripheral features. Aligning testing with business goals helps teams focus on issues that actually matter once the software is being used.

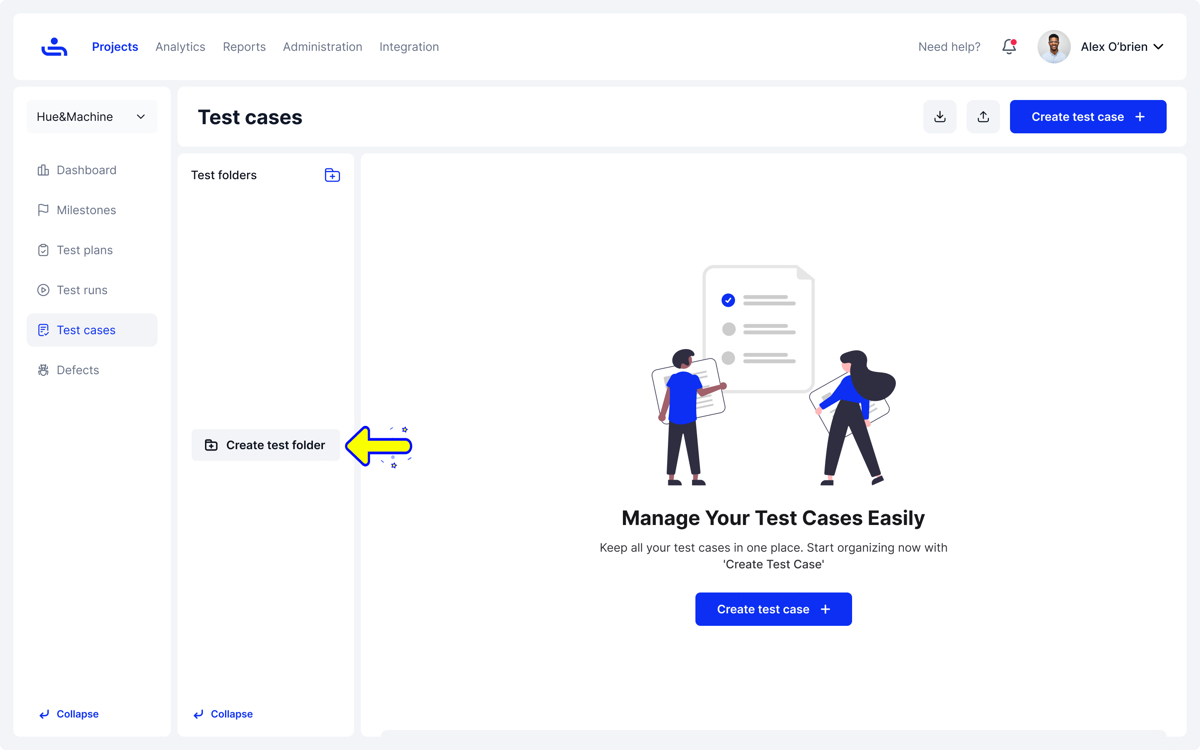

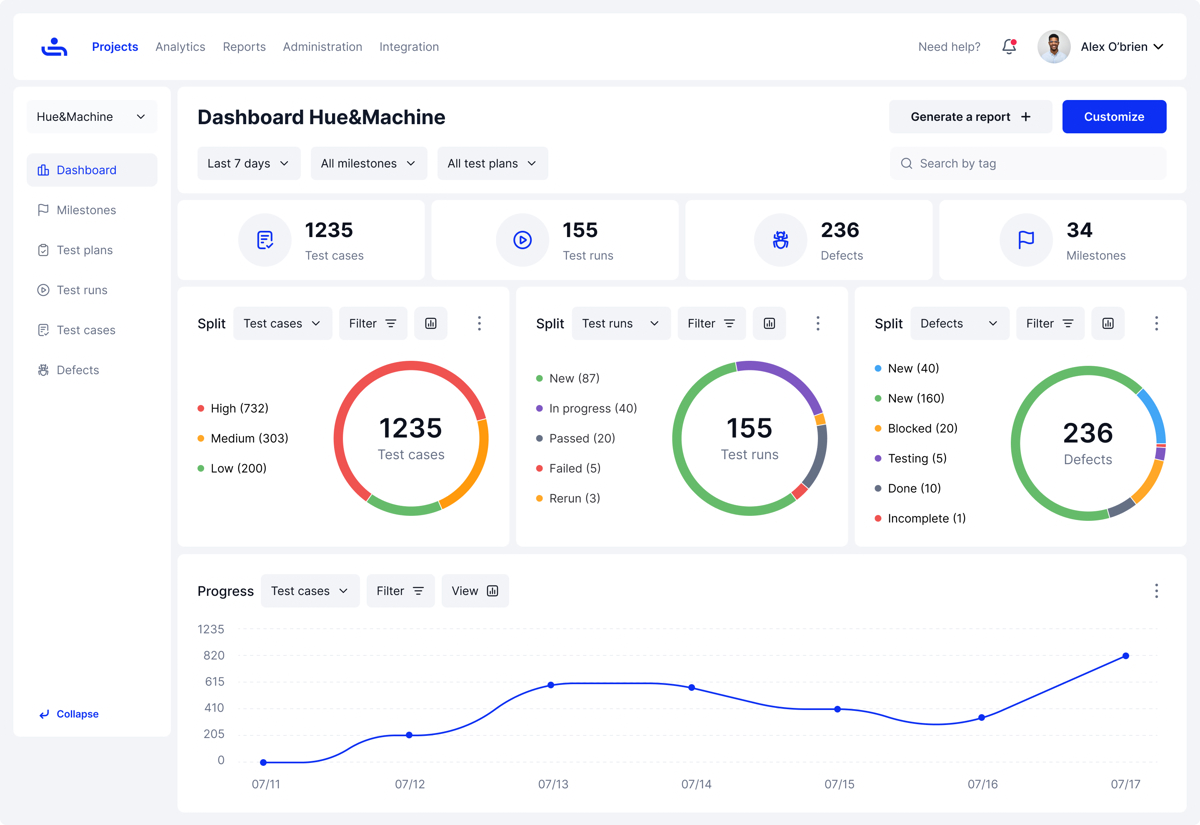

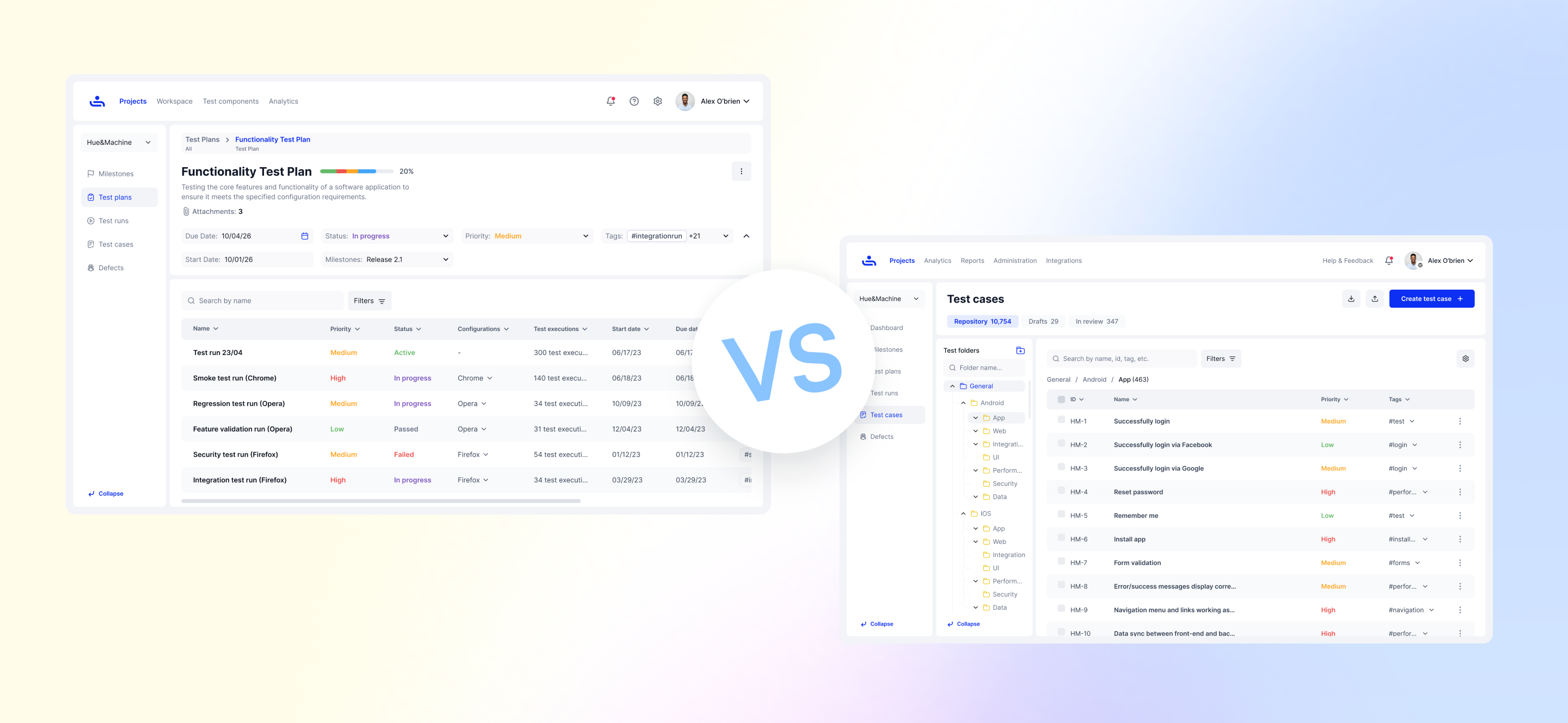

Using TestFiesta for Software Testing

Testing strategies only work if the tools supporting them don’t get in the way. That’s where TestFiesta fits in. It’s designed to support different testing strategies without forcing teams into a rigid structure or workflow. Whether you’re focusing on behavioral testing, structural coverage, or a mix of approaches, TestFiesta lets teams organize test cases in a way that reflects how they actually work.

Features like tags, reusable steps, and custom fields make it easier to adapt testing as products evolve. Instead of rebuilding test suites every time priorities shift, teams can adjust how tests are grouped, executed, and reviewed. This flexibility supports both fast-moving teams and those working on more complex systems, without adding unnecessary overhead. The goal is to support the testing strategy that makes the most sense for your product.

Conclusion

Software testing doesn’t have a universal formula. The most effective testing strategies are shaped by real constraints: product complexity, team skills, release pace, and risk. Understanding the different types of testing and how they fit into broader strategies helps teams make better decisions about where to focus their effort. When testing is intentional and aligned with how software is built and used, it becomes a strength rather than a bottleneck.

FAQs

What is a test strategy in software testing?

A test strategy is a high-level plan that explains how testing will be approached for a product. It outlines what will be tested first, where effort should be concentrated, and how different types of testing fit together. Instead of listing individual test cases, it focuses on priorities, risks, and practical constraints.

What is the 80/20 rule in testing?

The 80/20 rule in testing suggests that a large portion of issues usually comes from a small part of the system. In practice, this means a few features, workflows, or components tend to cause most problems. Teams use this idea to focus their testing efforts on high-risk or high-usage areas instead of trying to test everything equally.
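If you track defects by component, a few lines of code can show whether this pattern holds for your product. The sketch below uses invented defect counts and a hypothetical `pareto_components` helper; it ranks components by defect count and returns the smallest leading group that accounts for a given share of all defects.

```python
def pareto_components(defects_by_component, threshold=0.8):
    """Return the top components that together reach the threshold share of defects."""
    total = sum(defects_by_component.values())
    ranked = sorted(
        defects_by_component.items(), key=lambda item: item[1], reverse=True
    )
    selected = []
    running = 0
    for name, count in ranked:
        selected.append(name)
        running += count
        if running >= threshold * total:
            break
    return selected


# Invented defect counts, for illustration only.
DEFECTS = {
    "checkout": 42,
    "search": 31,
    "auth": 9,
    "profile": 6,
    "settings": 5,
    "reports": 4,
    "notifications": 3,
}

print(pareto_components(DEFECTS))
```

With these made-up numbers, three of the seven components account for at least 80 percent of the recorded defects, which is where extra testing attention would go first.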

What are some common software testing strategies?

Common strategies include static testing to catch issues early, structural testing to validate internal logic, behavioral testing to confirm user-facing behavior, and front-end testing to ensure the interface works as expected. Most teams don’t rely on just one strategy. They combine several approaches based on the type of product they’re building and how it’s delivered.

Which software testing strategy is good for my product?

The best strategy depends on your product’s risk, complexity, and pace of change. A fast-moving product with frequent releases may need strong regression and automation support, while a simpler or early-stage product might benefit more from focused manual and exploratory testing. Team skills, timelines, and user impact also matter. The right strategy is the one that helps you catch the most important problems without slowing development down.